Giuseppe Averta, Matilde Iuculano, Paolo Salaris, and Matteo Bianchi

IEEE Transactions on Human-Machine Systems

Abstract

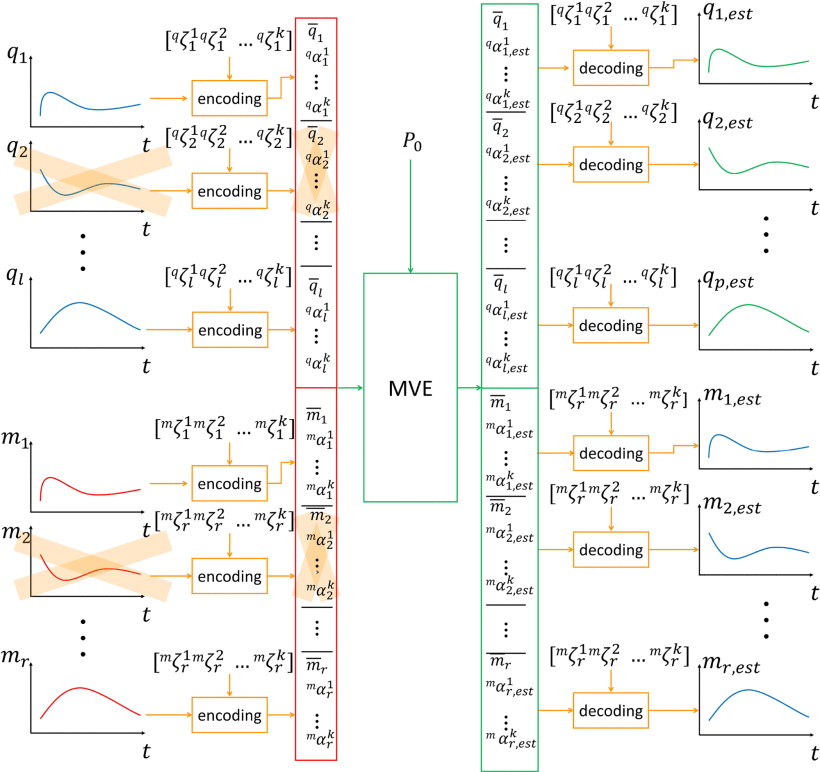

Wearable sensing has emerged as a promising solution for unobtrusive and ergonomic measurement of human motion. However, the reconstruction performance of these devices strongly depends on the quality and number of sensors, which are typically limited by wearability and economic constraints. One way to minimize the number of sensors is to exploit dimensionality reduction techniques that fuse prior information with insufficient sensing signals through minimum variance estimation. These methods have been successfully used for static hand pose reconstruction, but their extension to motion reconstruction has not yet been attempted. In this work, we propose using functional principal component analysis (functional PCA) to decompose multimodal, time-varying motion profiles into linear combinations of basis functions. Functional decomposition enables the estimation of the a priori covariance matrix, and hence the fusion of scarce and noisy measured data with a priori information. We also address the problem of identifying which elemental variables are the most informative to measure for a given class of tasks. We applied our method to two datasets of upper limb motion, D1 (joint trajectories) and D2 (joint trajectories and EMG data), using an optimal set of measured variables: four of seven joints for D1, and three of seven joints plus eight of twelve EMG channels for D2. We found that our approach reconstructs upper limb motion with a median error of 0.013±0.006 rad for D1 (relative median error 0.9%), and of 0.038±0.023 rad and 0.003±0.002 mV for D2 (relative median errors 2.9% and 5.1%, respectively).
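
The fusion of a functional prior with scarce measurements described above can be illustrated with a small sketch. It is a minimal sketch under stated assumptions, not the authors' implementation. Functional PCA is approximated by ordinary PCA on time-sampled trajectories concatenated into one vector per trial. The data are synthetic: four hypothetical joints driven by three smooth primitives. The measurement model is assumed to be `y = H x + v` with white noise `v` of variance `noise_var`, and the minimum variance estimate takes the standard linear form `x_hat = mu + Sigma H^T (H Sigma H^T + R)^-1 (y - H mu)`. The greedy trace-minimization used to pick which variables to measure is a stand-in heuristic, not the paper's selection criterion. All function names (`fpca_prior`, `selection_matrix`, `mve`, `greedy_select`, ...) are hypothetical.

```python
import numpy as np


def fpca_prior(X, n_components, ridge=1e-6):
    """Discretized functional PCA on rows of X (n_trials, D*T).

    Returns the mean trajectory and the prior covariance
    Sigma = B diag(lam) B^T + ridge*I built from the leading principal functions.
    """
    mu = X.mean(axis=0)
    _, s, Vt = np.linalg.svd(X - mu, full_matrices=False)
    B = Vt[:n_components].T                          # (D*T, K) principal functions
    lam = s[:n_components] ** 2 / (X.shape[0] - 1)   # variance of each component
    # Small ridge (an assumption) keeps Sigma positive definite.
    Sigma = B @ np.diag(lam) @ B.T + ridge * np.eye(X.shape[1])
    return mu, Sigma


def selection_matrix(measured, D, T):
    """H selects all T samples of each measured variable (variable-major layout)."""
    cols = [d * T + t for d in measured for t in range(T)]
    H = np.zeros((len(cols), D * T))
    H[np.arange(len(cols)), cols] = 1.0
    return H


def mve_gain(H, Sigma, noise_var):
    """Gain K = Sigma H^T (H Sigma H^T + R)^-1 with R = noise_var * I."""
    S = H @ Sigma @ H.T + noise_var * np.eye(H.shape[0])
    return np.linalg.solve(S, H @ Sigma).T  # valid because S and Sigma are symmetric


def mve(y, H, mu, Sigma, noise_var):
    """Minimum variance estimate of x from y = H x + v, plus posterior covariance."""
    K = mve_gain(H, Sigma, noise_var)
    x_hat = mu + K @ (y - H @ mu)
    P = Sigma - K @ H @ Sigma
    return x_hat, P


def posterior_trace(measured, Sigma, D, T, noise_var):
    """Total posterior variance if the listed variables were measured."""
    H = selection_matrix(measured, D, T)
    return np.trace(Sigma - mve_gain(H, Sigma, noise_var) @ H @ Sigma)


def greedy_select(Sigma, D, T, n_select, noise_var):
    """Hypothetical heuristic: repeatedly add the variable that most reduces trace(P)."""
    chosen = []
    for _ in range(n_select):
        candidates = [d for d in range(D) if d not in chosen]
        best = min(candidates,
                   key=lambda d: posterior_trace(chosen + [d], Sigma, D, T, noise_var))
        chosen.append(best)
    return chosen


# --- Synthetic demo: D joints, T samples per trial (illustrative values only) ---
rng = np.random.default_rng(0)
D, T, n_train, noise_var = 4, 50, 200, 1e-4
t = np.linspace(0.0, 1.0, T)
prims = np.stack([np.sin(np.pi * t), np.sin(2 * np.pi * t), t ** 2])  # (3, T)
mixing = rng.normal(size=(3, D))  # loading of each primitive on each joint


def sample_trials(n):
    """Each trial: joint d follows sum_k c_k * mixing[k, d] * prims[k]."""
    c = rng.normal(size=(n, 3))
    traj = np.einsum("nk,kd,kt->ndt", c, mixing, prims)
    return traj.reshape(n, D * T)  # row layout: index d*T + t


mu, Sigma = fpca_prior(sample_trials(n_train), n_components=3)
measured = greedy_select(Sigma, D, T, n_select=2, noise_var=noise_var)
H = selection_matrix(measured, D, T)

x_true = sample_trials(1)[0]
y = H @ x_true + rng.normal(scale=np.sqrt(noise_var), size=H.shape[0])
x_hat, P = mve(y, H, mu, Sigma, noise_var)

err = np.sqrt(np.mean((x_hat - x_true) ** 2))         # error over all joints
baseline = np.sqrt(np.mean((mu - x_true) ** 2))       # prior mean alone
print("measured joints:", measured, "RMS error:", err, "prior-only RMS:", baseline)
```

Multimodal data fit the same layout: EMG channels could be appended as extra variables in each row of `X`, although joint angles (rad) and EMG amplitudes (mV) would likely need scaling before PCA so that neither modality dominates the covariance. Because the synthetic trials here are exactly rank three, the unmeasured joints are recovered almost perfectly; real motion data would leave a larger residual.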

    @ARTICLE{averta2022optimal,
      author={Averta, Giuseppe and Iuculano, Matilde and Salaris, Paolo and Bianchi, Matteo},
      journal={IEEE Transactions on Human-Machine Systems},
      title={Optimal Reconstruction of Human Motion From Scarce Multimodal Data},
      year={2022},
      volume={52},
      number={5},
      pages={833-842},
      doi={10.1109/THMS.2022.3163184}}