Sebastian Koch, Johanna Wald, Mirco Colosi, Narunas Vaskevicius, Pedro Hermosilla, Federico Tombari and Timo Ropinski

Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025.

Abstract

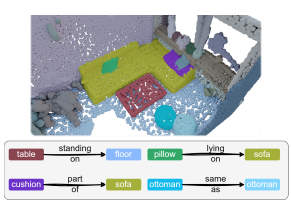

Neural radiance fields are an emerging 3D scene representation and have recently been extended to learn features for scene understanding by distilling open-vocabulary features from vision-language models. However, current methods primarily focus on object-centric representations, supporting object segmentation or detection, while understanding semantic relationships between objects remains largely unexplored. To address this gap, we propose RelationField, the first method to extract inter-object relationships directly from neural radiance fields. RelationField represents relationships between objects as pairs of rays within a neural radiance field, effectively extending its formulation to include implicit relationship queries. To teach RelationField complex, open-vocabulary relationships, relationship knowledge is distilled from multi-modal LLMs. To evaluate RelationField, we solve open-vocabulary 3D scene graph generation and relationship-guided instance segmentation, achieving state-of-the-art performance in both tasks.

@misc{koch2025relationfieldrelateradiancefields,
  title={RelationField: Relate Anything in Radiance Fields},
  author={Sebastian Koch and Johanna Wald and Mirco Colosi and Narunas Vaskevicius and Pedro Hermosilla and Federico Tombari and Timo Ropinski},
  year={2025},
  eprint={2412.13652},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2412.13652},
}