Sariah Mghames, Luca Castri, Marc Hanheide, and Nicola Bellotto

Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)

Abstract

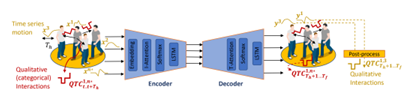

Deploying service robots in our daily life, whether in restaurants, warehouses or hospitals, requires reasoning about the interactions happening in dense and dynamic scenes. In this paper, we present and benchmark three new approaches to model and predict multi-agent interactions in dense scenes, including the use of an intuitive qualitative representation. The proposed solutions take into account static and dynamic context to predict individual interactions. They exploit an input- and a temporal-attention mechanism, and are tested on medium and long-term time horizons. The first two approaches integrate different relations from the so-called Qualitative Trajectory Calculus (QTC) within a state-of-the-art deep neural network to create a symbol-driven neural architecture for predicting spatial interactions. The third approach implements a purely data-driven network for motion prediction, whose output is post-processed to predict QTC spatial interactions. Experimental results on a popular robot dataset of challenging crowded scenarios show that the purely data-driven prediction approach generally outperforms the other two. The three approaches were further evaluated on a different but related human scenario to assess their generalisation capability.
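To make the qualitative representation more concrete, here is a minimal sketch of how two consecutive 2D positions of a pair of agents k and l could be mapped to a QTC_C relation, similar in spirit to the post-processing step of the third approach. The function name `qtc_c`, the stability threshold `eps` and the symbol order (q1, q2, q4, q5) are illustrative assumptions, not the authors' implementation. Each agent's left/right side is assumed to be measured relative to the line directed from itself towards the other agent.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def _sym(value: float, eps: float) -> str:
    """Map a signed quantity to a QTC symbol.

    Positive values (approaching, or moving to the left) map to '-',
    negative values (receding, or moving to the right) map to '+',
    and values within +/- eps are treated as stable ('0').
    """
    if value > eps:
        return "-"
    if value < -eps:
        return "+"
    return "0"


def qtc_c(k0: Point, k1: Point, l0: Point, l1: Point, eps: float = 0.0) -> str:
    """Hypothetical helper: QTC_C relation between agents k and l.

    k0, k1 and l0, l1 are the positions of k and l at two consecutive
    time steps. Returns "q1,q2,q4,q5" (assumed symbol order).
    """
    rx, ry = l0[0] - k0[0], l0[1] - k0[1]
    norm = math.hypot(rx, ry)
    if norm == 0.0:
        raise ValueError("agents k and l must not coincide at the first time step")
    ux, uy = rx / norm, ry / norm  # unit vector pointing from k to l

    dkx, dky = k1[0] - k0[0], k1[1] - k0[1]  # displacement of k
    dlx, dly = l1[0] - l0[0], l1[1] - l0[1]  # displacement of l

    # q1 / q2: projection of each agent's motion onto the direction towards the other.
    q1 = _sym(dkx * ux + dky * uy, eps)
    q2 = _sym(-(dlx * ux + dly * uy), eps)
    # q4 / q5: 2D cross product; positive means the motion turns to the left of
    # the line directed from the moving agent towards the other agent.
    q4 = _sym(ux * dky - uy * dkx, eps)
    q5 = _sym(-(ux * dly - uy * dlx), eps)
    return ",".join((q1, q2, q4, q5))
```

For example, `qtc_c((0, 0), (1, 0), (5, 0), (5, 0))` returns `"-,0,0,0"`: k approaches a stationary l along the line joining them. Keeping only the first two symbols gives the coarser basic relation (QTC_B).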

@inproceedings{sariah2023qualitative,
  title={Qualitative Prediction of Multi-Agent Spatial Interactions},
  author={Mghames, Sariah and Castri, Luca and Hanheide, Marc and Bellotto, Nicola},
  booktitle={Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)},
  year={2023}
}