Dinh-Cuong Hoang, Johannes A. Stork, and Todor Stoyanov

IEEE International Conference on Robotics and Automation (ICRA)

Abstract

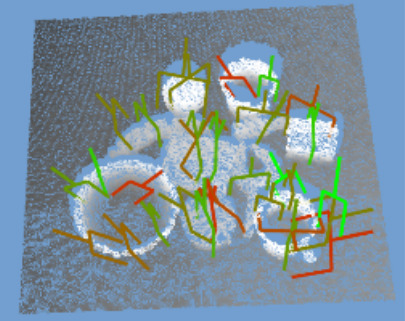

Conventional approaches to autonomous grasping rely on a pre-computed database of known objects to synthesize grasps, which is not possible for novel objects. Recently proposed deep learning-based approaches, on the other hand, have demonstrated the ability to generalize grasps to unknown objects. However, grasp generation remains a challenging problem, especially in cluttered environments under partial occlusion. In this work, we propose an end-to-end deep learning approach for generating 6-DOF collision-free grasps given a 3D scene point cloud. To build robustness to occlusion, the proposed model generates candidates by casting votes and accumulating evidence for feasible grasp configurations. We exploit contextual information by encoding the dependencies between objects in the scene into features to boost the performance of grasp generation. This contextual information enables our model to increase the likelihood that the generated grasps are collision-free. Our experimental results confirm that the proposed system performs favorably at predicting object grasps in cluttered environments in comparison to current state-of-the-art methods.

@inproceedings{hoang-2022-contextaware,

author = {Dinh-Cuong Hoang and Johannes A. Stork and Todor Stoyanov},

year = {2022},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

title = {Context-Aware Grasp Generation in Cluttered Scenes},

}