Tiago Rodrigues de Almeida, Tim Schreiter, Andrey Rudenko, Luigi Palmieri, Johannes A. Stork and Achim J. Lilienthal

20th edition of the ACM/IEEE International Conference on Human-Robot Interaction

Abstract

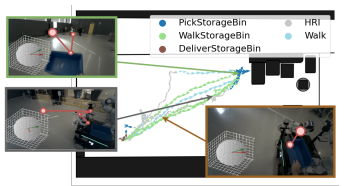

Accurate prediction of human activities and trajectories is crucial for safe and reliable human-robot interaction in dynamic environments shared with mobile robots, such as industrial settings. Datasets with fine-grained action labels for people moving in industrial environments alongside mobile robots are scarce, as most existing datasets focus on social navigation in public spaces. This paper introduces THÖR-MAGNI Act, a substantial extension of the THÖR-MAGNI dataset, which captures participant movements alongside robots in diverse semantic and spatial contexts. THÖR-MAGNI Act provides 8.3 hours of manually labeled participant actions derived from egocentric videos recorded with eye-tracking glasses. These actions, aligned with the motion cues provided by THÖR-MAGNI, follow a long-tailed distribution with diverse acceleration, velocity, and navigation distance profiles. We demonstrate the utility of THÖR-MAGNI Act on two tasks: action-conditioned trajectory prediction and joint action and trajectory prediction. To address these tasks, we propose two efficient transformer-based models that outperform the baselines. These results underscore the potential of THÖR-MAGNI Act for developing predictive models that enhance human-robot interaction in complex environments.
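The action labels are characterized by their velocity, acceleration, and navigation distance profiles. As a rough illustration of how such per-segment statistics can be computed, the sketch below assumes each labeled action segment is a list of 2D positions in meters sampled at a fixed interval `dt` in seconds. The function name `motion_profile`, this input layout, and the choice of summary statistics are hypothetical and are not taken from the THÖR-MAGNI Act tooling.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # hypothetical layout: (x, y) in meters


def motion_profile(positions: List[Point], dt: float) -> Dict[str, float]:
    """Summarize a 2D trajectory segment sampled every `dt` seconds.

    Assumption: uniform sampling and positions in meters. Returns the mean
    speed (m/s), the mean absolute rate of change of speed (m/s^2), and the
    navigation distance (m) as the sum of step lengths.
    """
    if len(positions) < 3:
        raise ValueError("need at least 3 positions to estimate acceleration")
    if dt <= 0:
        raise ValueError("dt must be positive")
    # Euclidean length of each step between consecutive samples
    steps = [math.dist(p, q) for p, q in zip(positions, positions[1:])]
    # Speed over each step (finite difference of position)
    speeds = [s / dt for s in steps]
    # Rate of change of speed between consecutive steps (tangential only)
    accels = [(v2 - v1) / dt for v1, v2 in zip(speeds, speeds[1:])]
    return {
        "mean_speed": sum(speeds) / len(speeds),
        "mean_abs_accel": sum(abs(a) for a in accels) / len(accels),
        "distance": sum(steps),
    }


if __name__ == "__main__":
    # A walker moving along x at a constant 1 m/s, sampled every 0.5 s
    straight = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.5, 0.0)]
    print(motion_profile(straight, dt=0.5))
```

Grouping such profiles by action label is one simple way to see how, for example, walking segments differ from stationary or carrying segments in speed and distance covered.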

@misc{dealmeida2024thormagniactactionshuman,

title={TH\"OR-MAGNI Act: Actions for Human Motion Modeling in Robot-Shared Industrial Spaces},

author={Tiago Rodrigues de Almeida and Tim Schreiter and Andrey Rudenko and Luigi Palmieri and Johannes A. Stork and Achim J. Lilienthal},

year={2024},

eprint={2412.13729},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2412.13729},

}